Session-level identity in AI-driven workflows: The new frontier of enterprise risk

CybersecurityHQ - Free in-depth report

In 2023, the Federal Trade Commission banned Rite Aid from using facial recognition technology for five years. The drugstore chain had deployed AI-powered systems that generated false positives, disproportionately flagging women and people of color as shoplifters. Acting on these alerts, employees followed customers through stores, searched them, ordered them to leave, and called police. The FTC found that Rite Aid failed to test the technology for accuracy, monitor for bias, or properly train employees on its use. This was not a case about deceptive marketing. It was enforcement based on actual harm from uncontrolled AI deployment.

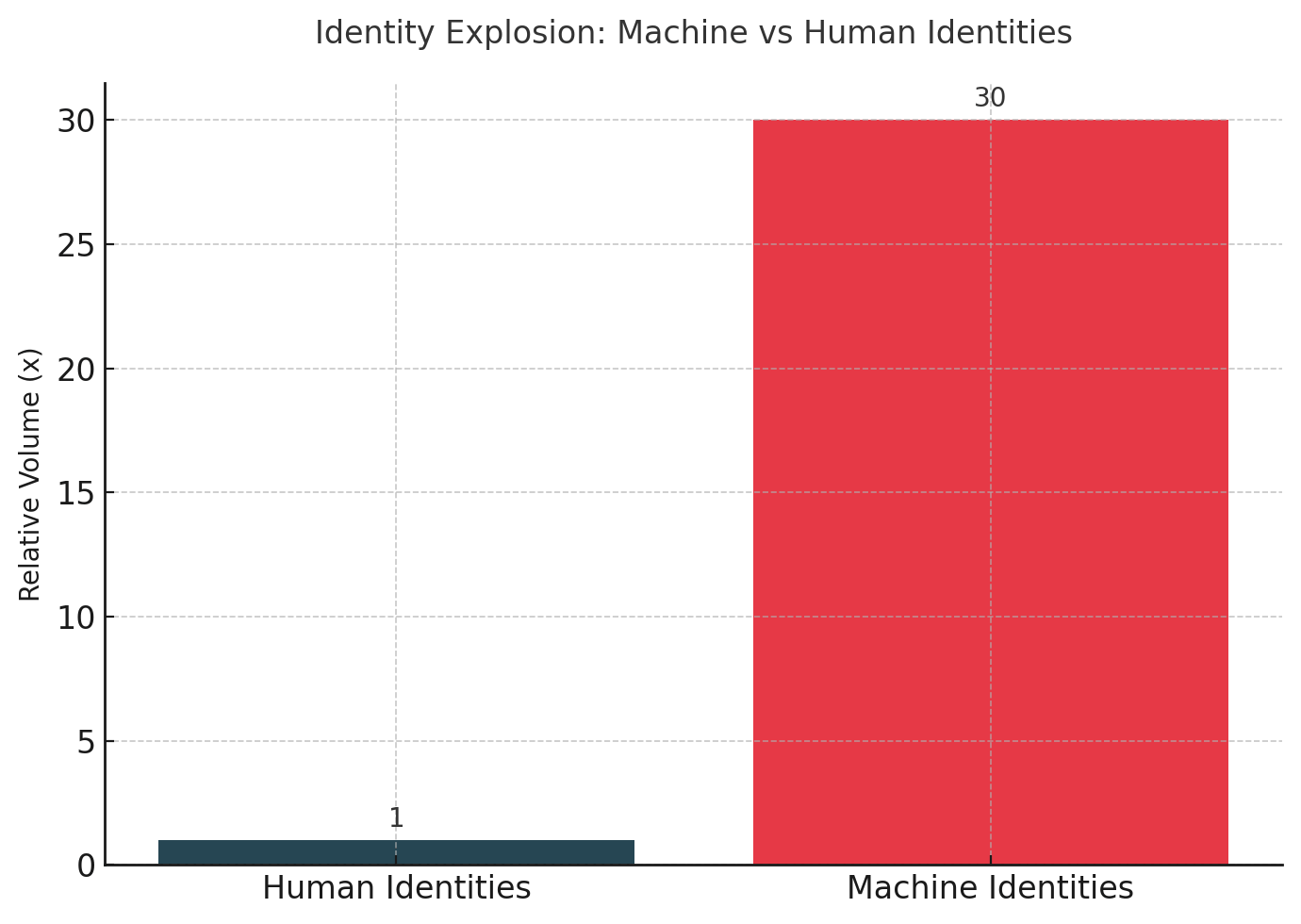

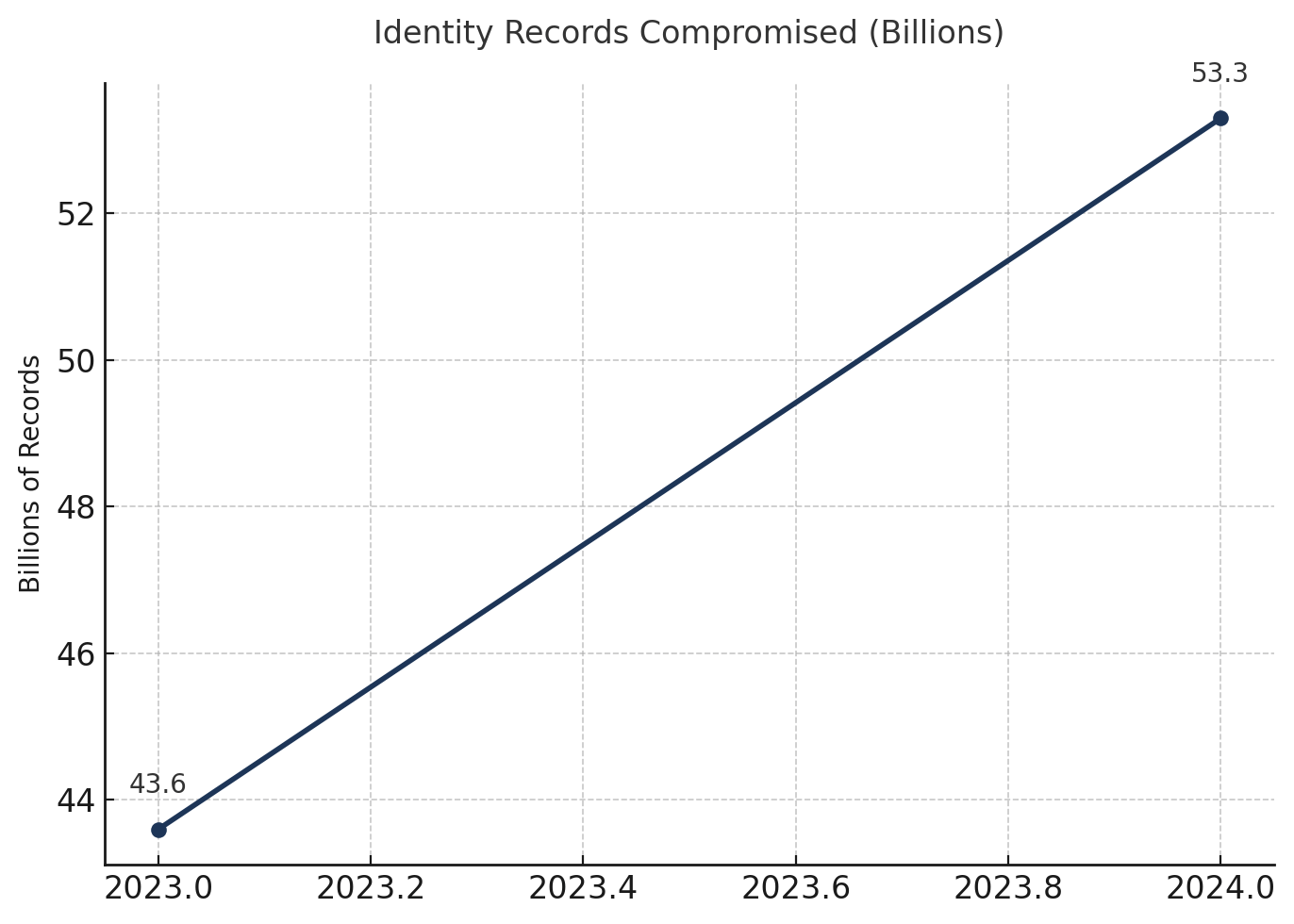

The Rite Aid case represents a narrow slice of a broader crisis. As enterprises deploy agentic AI systems that operate autonomously across their infrastructure, the identity and access management systems designed for human users are failing at scale. The gap is quantifiable. Machine identities now outnumber human identities by 20 to 50 times in most organizations. In 2024, 91% of companies experienced identity-related security incidents. Researchers recaptured 53.3 billion stolen identity records from criminal sources that year, a 22% increase from 2023. Among those records: 895,802 stolen credentials specifically for enterprise AI platforms.

The vulnerability is structural. Traditional IAM operates on assumptions that no longer hold: static users, predictable access patterns, manual provisioning cycles measured in days or weeks. AI agents spin up and down in seconds, chain requests across dozens of systems, and make autonomous decisions at machine speed. The session, not the user account, has become the critical security boundary. And most enterprises have no effective controls at that level.

The Velocity Problem

Agentic AI systems generate session identities at volumes that overwhelm traditional governance. Every AI agent, script, or automated workflow brings its own credentials or tokens. Instead of a few thousand employee identities, organizations suddenly have to manage service accounts and API tokens that outnumber human accounts by orders of magnitude.

Consider the typical identity lifecycle for a human employee. Provisioning happens during onboarding, often taking several days as IT processes access requests, assigns roles, and configures multi-factor authentication. Changes occur during the "mover" phase when roles shift, usually quarterly or annually. De-provisioning happens at termination, ideally within 24 hours but often much slower. The entire cycle operates on human timescales: days, weeks, months.

AI agents in CI/CD pipelines operate differently. An agent is instantiated when a developer commits code. It needs immediate access to version control, build systems, artifact repositories, and deployment environments. The access must be scoped to exactly what that specific build requires. The agent completes its work in minutes and should be deprovisioned immediately. The next commit triggers a new agent with potentially different access requirements.

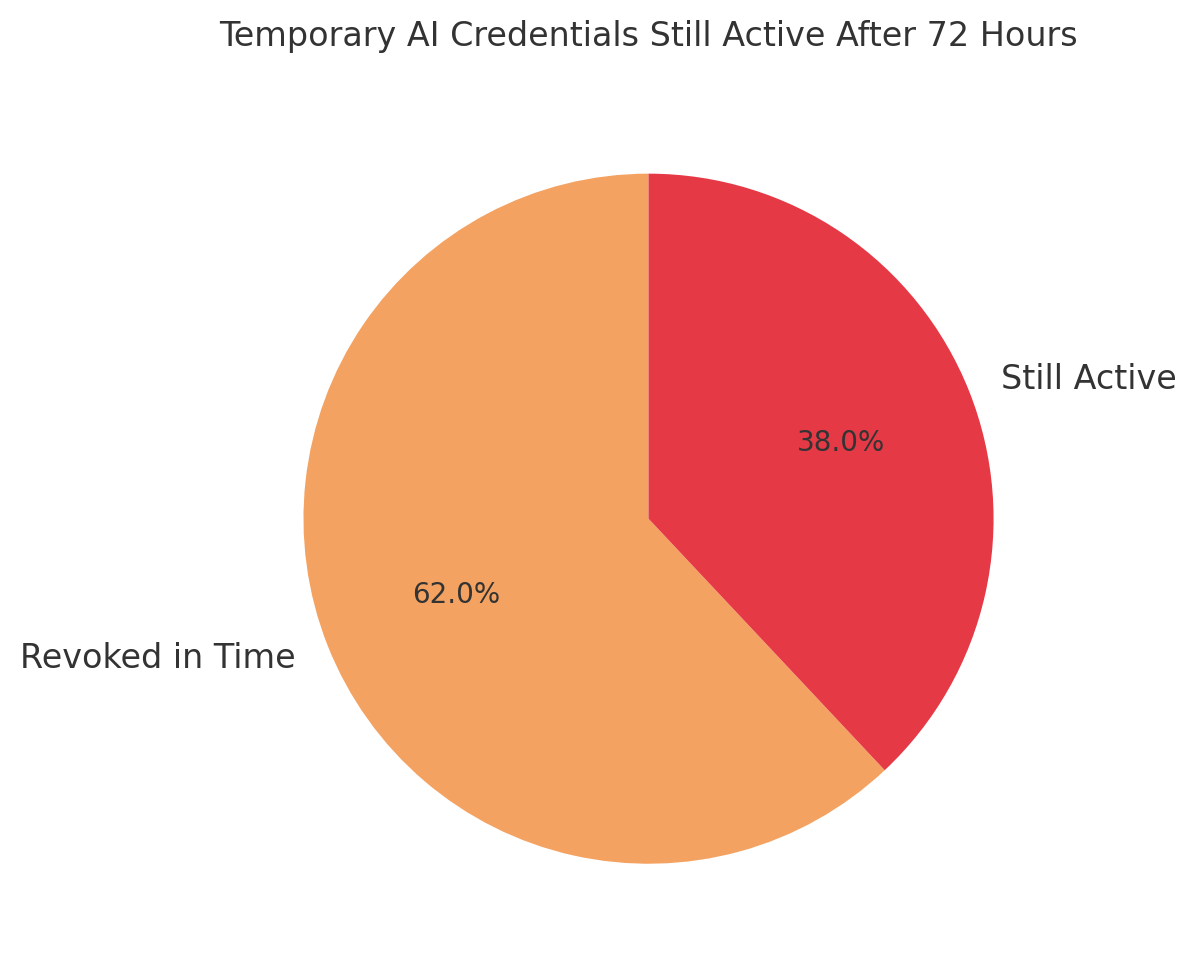

This velocity gap creates the core vulnerability. Research shows that 38% of supposedly temporary credentials for AI workloads remain active more than 72 hours after task completion. These orphaned sessions become persistent backdoors. GitGuardian's analysis found that 70% of secrets leaked in 2022 were still active in 2023, providing sustained access for attackers.
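A minimal sketch of how orphaned credentials of this kind could be surfaced, assuming an inventory that records when each credential's task finished. The `Credential` fields and the 72-hour grace period are illustrative assumptions, not a specific product's schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class Credential:
    credential_id: str                      # hypothetical identifier
    task_completed_at: Optional[datetime]   # None while the task is still running
    revoked: bool = False


def find_orphaned(credentials, now, grace=timedelta(0)):
    """Return credentials still active after their task finished plus a grace period."""
    return [
        c for c in credentials
        if not c.revoked
        and c.task_completed_at is not None
        and now - c.task_completed_at > grace
    ]


now = datetime(2025, 1, 10, 12, 0, tzinfo=timezone.utc)
inventory = [
    Credential("build-1041", now - timedelta(hours=80)),                # finished long ago, never revoked
    Credential("build-1042", now - timedelta(hours=1)),                 # finished recently
    Credential("build-1043", now - timedelta(hours=90), revoked=True),  # cleaned up correctly
    Credential("build-1044", None),                                     # task still running
]
stale = find_orphaned(inventory, now, grace=timedelta(hours=72))
print([c.credential_id for c in stale])
```

In practice the inventory would come from a vault or cloud IAM export, and the output would feed a revocation job rather than a print statement.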

The problem compounds across the enterprise. Agentic AI systems do not operate in isolation. They coordinate with other agents, call external APIs, access multiple data sources, and hand off context between workflow stages. Each component needs its own identity and session credentials. Each handoff creates a potential security boundary.

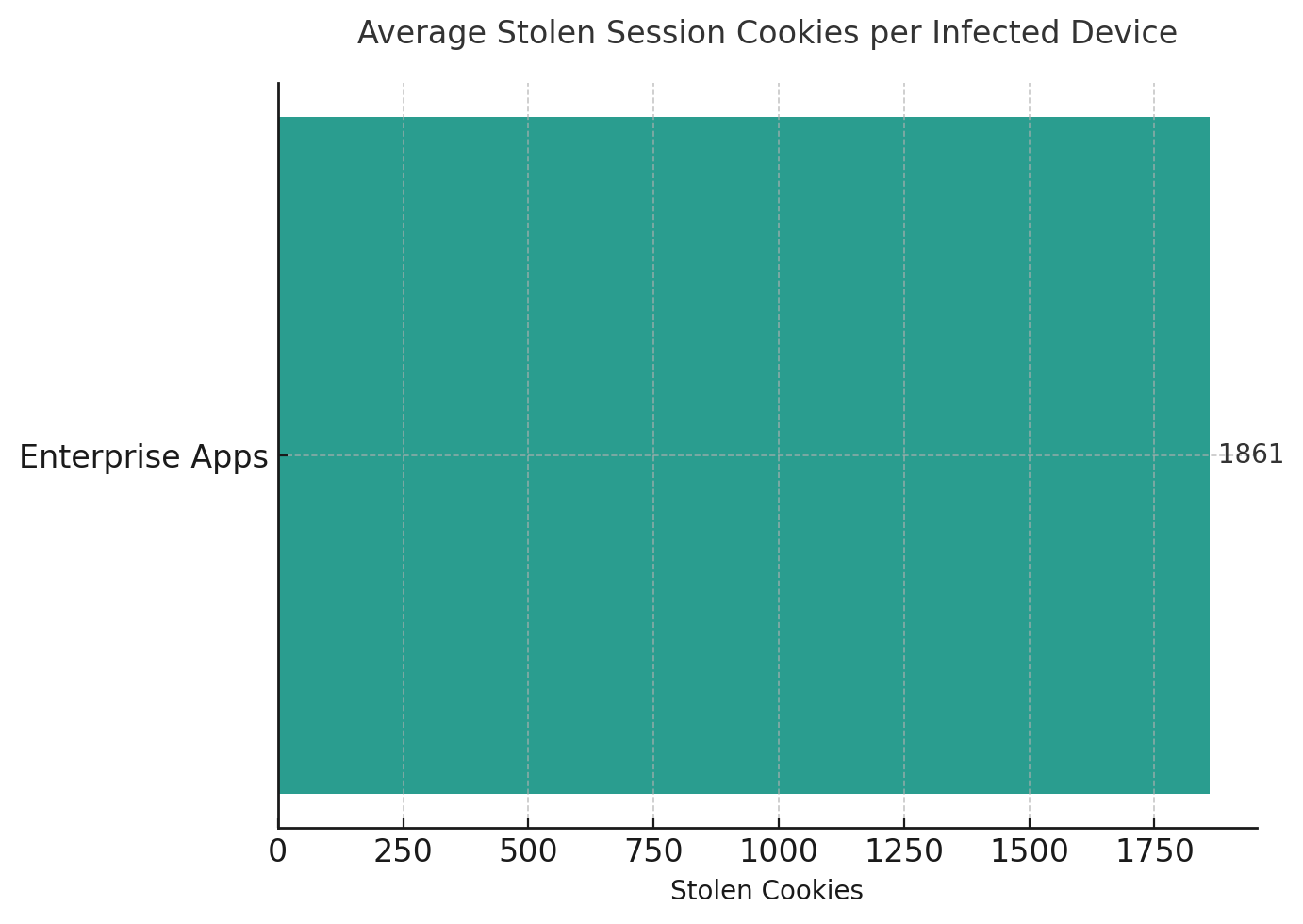

SpyCloud's 2025 report documents the scale. Enterprises now average over 300 applications vulnerable to infostealer malware that harvests session cookies and credentials. Infected devices yield an average of 1,861 stolen cookies each. That represents 1,861 potential session hijacking opportunities per compromise, many of them for machine identities that lack the monitoring applied to human accounts.

Why Least Privilege Fails at the Session Level

The principle is straightforward: every session should operate with the minimum access necessary for its specific task. In practice, achieving session-level least privilege with current tools is nearly impossible.

The technical barrier is fundamental. Most IAM platforms issue access tokens designed for human sessions measured in hours. OAuth tokens typically expire after 3,600 seconds. SAML assertions last 300 to 600 seconds. But AI agents making rapid sequences of API calls need per-request scoping, not per-hour tokens. The granularity mismatch is severe.
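To make the granularity point concrete, here is a hedged sketch of a per-request token: one that is valid for a single method and path for a few seconds, rather than for an hour of arbitrary calls. It uses a plain HMAC signature from the Python standard library; the signing key, claim names, and paths are assumptions for illustration, and this is not a JWT or OAuth implementation.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-only-signing-key"  # hypothetical key; a real system would fetch this from a vault


def mint(method, path, ttl_seconds=5, now=None):
    """Issue a token bound to exactly one method and path, valid for a few seconds."""
    now = time.time() if now is None else now
    claims = {"method": method, "path": path, "exp": now + ttl_seconds}
    payload = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode())
    signature = hmac.new(SECRET, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature


def verify(token, method, path, now=None):
    """Accept the token only for the request it was minted for, and only before expiry."""
    now = time.time() if now is None else now
    try:
        payload, signature = token.rsplit(".", 1)
    except ValueError:
        return False
    expected = hmac.new(SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return claims["method"] == method and claims["path"] == path and now < claims["exp"]


token = mint("GET", "/ledger/q3", ttl_seconds=5, now=1000.0)
same_request = verify(token, "GET", "/ledger/q3", now=1002.0)
other_request = verify(token, "DELETE", "/ledger/q3", now=1002.0)
after_expiry = verify(token, "GET", "/ledger/q3", now=1006.0)
print(same_request, other_request, after_expiry)
```

A stolen token of this shape is useful only for the single request it names, and only for seconds, which is the property hour-long bearer tokens lack.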

The second barrier is context. Least privilege is inherently context-dependent. A database query agent might need read access to financial tables during business hours for legitimate reporting, but the same access at unusual hours should trigger additional verification. Current systems lack the contextual awareness to make such distinctions dynamically. They rely on static role definitions: DatabaseReader, APIWriter, AdminUser. These roles cannot express "read table X, only during business hours, only when initiated by user Y, for workflow Z."
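The kind of rule a static role cannot express can be sketched as a small policy object evaluated on every request. This is an illustrative sketch only: the class names, the table `finance.ledger`, the user `alice`, and the 09:00 to 17:00 weekday window are all assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class RequestContext:
    action: str
    resource: str
    initiated_by: str
    workflow: str
    timestamp: datetime


@dataclass(frozen=True)
class SessionPolicy:
    action: str
    resource: str
    initiated_by: str
    workflow: str
    window_start: time
    window_end: time

    def allows(self, ctx):
        """Allow only the exact action/resource/user/workflow, on weekdays inside the time window."""
        in_window = self.window_start <= ctx.timestamp.time() < self.window_end
        is_weekday = ctx.timestamp.weekday() < 5
        return (
            ctx.action == self.action
            and ctx.resource == self.resource
            and ctx.initiated_by == self.initiated_by
            and ctx.workflow == self.workflow
            and in_window
            and is_weekday
        )


policy = SessionPolicy("read", "finance.ledger", "alice", "quarterly-report", time(9, 0), time(17, 0))

daytime = RequestContext("read", "finance.ledger", "alice", "quarterly-report", datetime(2025, 3, 4, 10, 30))
overnight = RequestContext("read", "finance.ledger", "alice", "quarterly-report", datetime(2025, 3, 4, 2, 15))
wrong_workflow = RequestContext("read", "finance.ledger", "alice", "ad-hoc-export", datetime(2025, 3, 4, 10, 30))

print(policy.allows(daytime), policy.allows(overnight), policy.allows(wrong_workflow))
```

A production policy engine would more likely step up verification than flatly deny the overnight request, but the evaluation inputs are the same.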

The practical result is systematic over-scoping. Organizations grant broad permissions because tuning per-session access is impractical with existing tools. The architectural failure of legacy IAM systems to accommodate ephemeral needs drives organizations to adopt dangerously insecure workarounds: shared credentials, hardcoded API keys, or broad, over-permissioned roles granted to agents purely for operational simplicity and velocity. When an agent simply borrows a user's identity, the agent session can do anything that user can do, a far cry from least privilege. The approach is convenient but heavily over-scoped.

The Model Context Protocol, introduced by Anthropic in late 2024 and now adopted by OpenAI, Google, and Microsoft, attempts to address part of this by standardizing how AI agents connect to tools and data sources. Its authorization specification builds on OAuth 2.1, which gives organizations a common point at which to tie each agent request to a real user or service account. Over 1,000 connectors have been built. Analysts estimate that by end of 2025, over 20% of enterprise AI deployments will use MCP or similar frameworks. But adoption remains early, integration is complex, and legacy systems are unchanged.

The Attacker's Perspective

Runtime memory extraction has become standard practice among sophisticated threat actors. Once an attacker gains initial access to a system running AI workloads (through phishing, vulnerability exploitation, or supply chain compromise), they dump process memory to harvest temporary tokens, OAuth credentials, and ephemeral API keys. These credentials may be short-lived in theory, but "short-lived" often means minutes or hours, sufficient time for lateral movement at machine speed.

The CISA Zero Trust Maturity Model documents a shift in attack patterns: the escalation path has inverted. Previously, attackers moved from low-privilege human access to high-privilege human accounts (system administrator, domain admin). Now they move from low-privilege human entry to high-privilege machine identity. The initial compromise requires less sophistication but yields disproportionately higher risk and wider impact.

Token replay attacks now target ephemeral workloads. Despite short container lifespans, attackers maintain persistence at the identity layer by intercepting and reusing JWT or OAuth refresh tokens across sessions. Detection is difficult because the tokens are technically valid and the sessions appear legitimate in logs.
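One common countermeasure to refresh-token replay is rotation with reuse detection: each refresh token can be redeemed once, and a second redemption is treated as evidence of theft. The sketch below is an in-memory illustration under that assumption; `RefreshTokenStore` and its methods are hypothetical names, not an OAuth library API.

```python
import secrets


class RefreshTokenStore:
    """One-time-use refresh tokens; reuse of a rotated token revokes the whole token family."""

    def __init__(self):
        self._active = {}              # token -> family id
        self._used = {}                # already-redeemed token -> family id
        self._revoked_families = set()

    def issue(self, family=None):
        family = family or secrets.token_hex(8)
        token = secrets.token_urlsafe(32)
        self._active[token] = family
        return token

    def redeem(self, token):
        """Exchange a refresh token for a new one, or raise if replay is suspected."""
        if token in self._used:
            # A rotated token came back: someone is replaying it. Revoke every sibling.
            family = self._used[token]
            self._revoked_families.add(family)
            for t in [t for t, f in self._active.items() if f == family]:
                del self._active[t]
            raise PermissionError("refresh token reuse detected; family revoked")
        family = self._active.pop(token, None)
        if family is None or family in self._revoked_families:
            raise PermissionError("unknown or revoked refresh token")
        self._used[token] = family
        return self.issue(family)


store = RefreshTokenStore()
t1 = store.issue()
t2 = store.redeem(t1)          # legitimate rotation

try:
    store.redeem(t1)           # an intercepted copy of the old token is replayed
    replay_error = ""
except PermissionError as exc:
    replay_error = str(exc)

try:
    store.redeem(t2)           # the newest token in the family no longer works either
    family_still_valid = True
except PermissionError:
    family_still_valid = False

print(replay_error, family_still_valid)
```

Revoking the whole family forces the legitimate workload to re-authenticate, which also produces a log event that does not look like routine activity.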

AI agent hijacking represents a sophisticated vector documented in NIST guidelines and the OWASP Agentic Security Initiative. The attack exploits the lack of separation between trusted instructions and untrusted data within the agent's processing. An attacker embeds malicious instructions in a document, email, or website that the agent ingests. If successful, the hijacked agent uses its delegated privileges to perform unintended actions: mass data exfiltration, remote code execution, or automated phishing campaigns. The logs show authorized activity by a legitimate agent. The malicious payload is hidden in the data context itself.

OWASP has cataloged this as a top risk in AI systems, identifying privilege compromise through indirect prompt injection as a critical vulnerability where misconfigurations allow unauthorized pivots. Traditional session hijacking defense (preventing token theft) does not stop these attacks. The session is never stolen. It is subverted through the AI's own processing logic.
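Because the session itself stays valid, one mitigation is to fix what a task may do before the agent reads any untrusted content, and to enforce that outside the model. The following is a minimal sketch of such a gate; the tool names and targets are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolCall:
    tool: str      # e.g. "read_email" or "send_email" (hypothetical tool names)
    target: str


class TaskScope:
    """Allowlist fixed when the task is delegated, before any untrusted data is read."""

    def __init__(self, allowed_calls):
        self._allowed = frozenset(allowed_calls)

    def authorize(self, call):
        # The decision ignores anything the model "says"; only the pre-approved set matters.
        return call in self._allowed


# Task delegated by the user: summarize the inbox into a notes file.
scope = TaskScope({ToolCall("read_email", "inbox"), ToolCall("write_summary", "user_notes")})

legit = scope.authorize(ToolCall("read_email", "inbox"))
# A call the agent proposes after ingesting a document with embedded instructions.
injected = scope.authorize(ToolCall("send_email", "attacker@example.com"))
print(legit, injected)
```

The gate does not detect the injection. It only limits what a subverted session can reach, which is the session-level least privilege argued for earlier.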

What Frameworks Provide

NIST's Cybersecurity Framework 2.0 now explicitly addresses workload identities alongside human identities, advocating for dynamic, policy-based access controls rather than static credentials. The framework emphasizes just-in-time credential issuance with short lifespans and comprehensive logging of all machine actions.

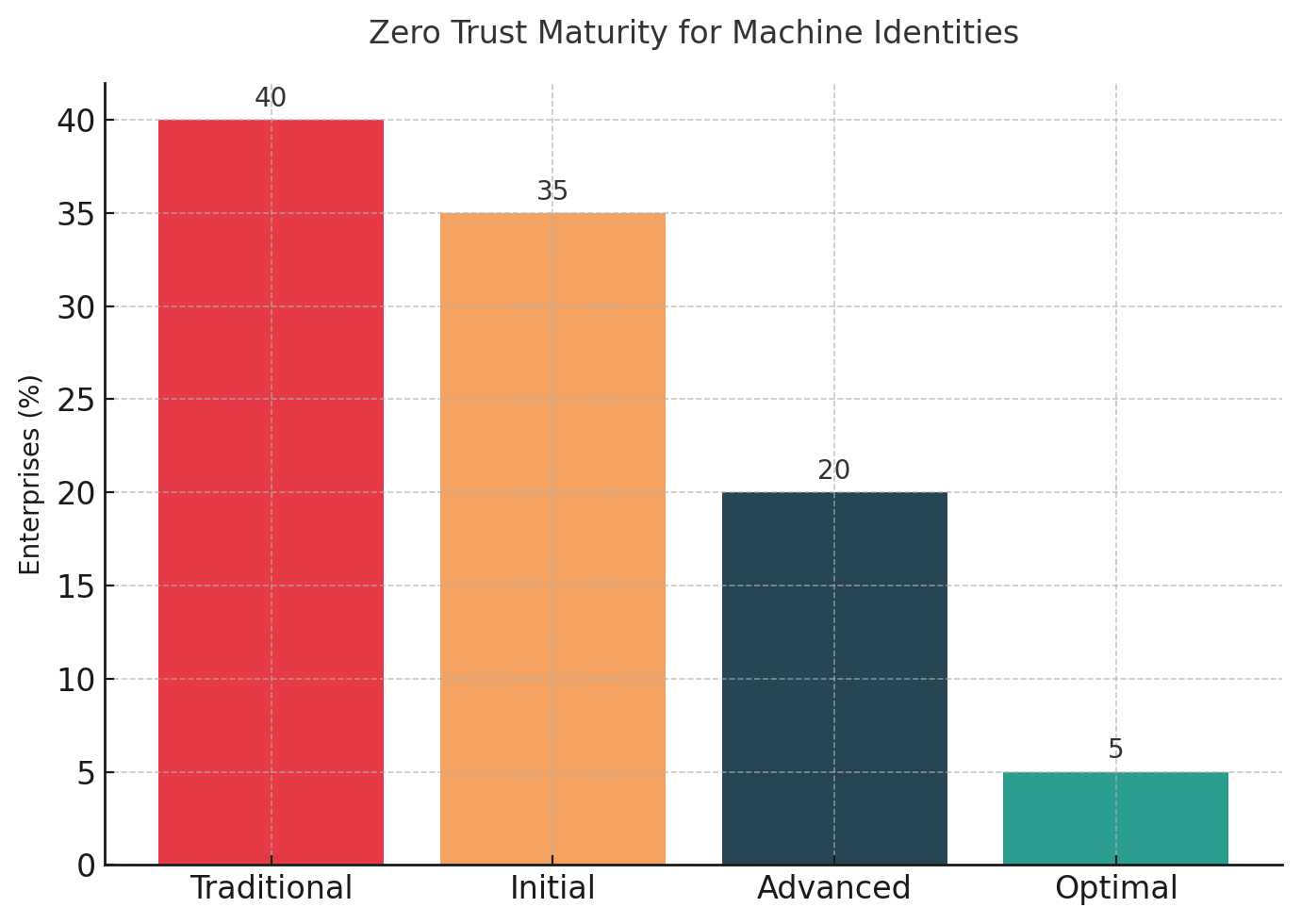

The CISA Zero Trust Maturity Model version 2.0 provides the most detailed roadmap. The model defines maturity stages for identity governance: Traditional, Initial, Advanced, and Optimal. Most enterprises operate at Traditional or Initial for machine identities, characterized by static policy enforcement, manual review, and limited automation. Optimal maturity requires complete automation of identity policies across all entities, continuous enforcement, real-time risk analysis, and dynamic rule updates.

The gap between current state and required state is substantial. Achieving Optimal maturity means automated orchestration of the full identity lifecycle (creation, credential issuance, rotation, revocation) without manual intervention. It means policy engines that evaluate every request based on real-time context: who is requesting, from where, for what purpose, at what risk level, and whether the pattern matches expected behavior. It means continuous monitoring with anomaly detection capable of identifying malicious activity that appears legitimate in standard audit logs.

Industry initiatives are emerging. The Workload Identity in Multi-System Environments (WIMSE) working group at IETF is developing standards for federated identity in multi-cloud environments with emphasis on ephemeral credentials. Cloud providers have introduced features like AWS Security Token Service and GCP short-lived service account tokens. The open-source SPIFFE specification and its SPIRE reference implementation provide cryptographic workload identities with automatic rotation, enabling microservices to authenticate via constantly refreshed certificates instead of stored passwords.

But implementation lags specification significantly. The gap represents both technical debt and organizational inertia. Legacy systems often lack APIs for programmatic policy changes. Security teams lack visibility into shadow AI deployments. Business units resist the operational overhead of stricter identity controls.

What CISOs Must Do Now

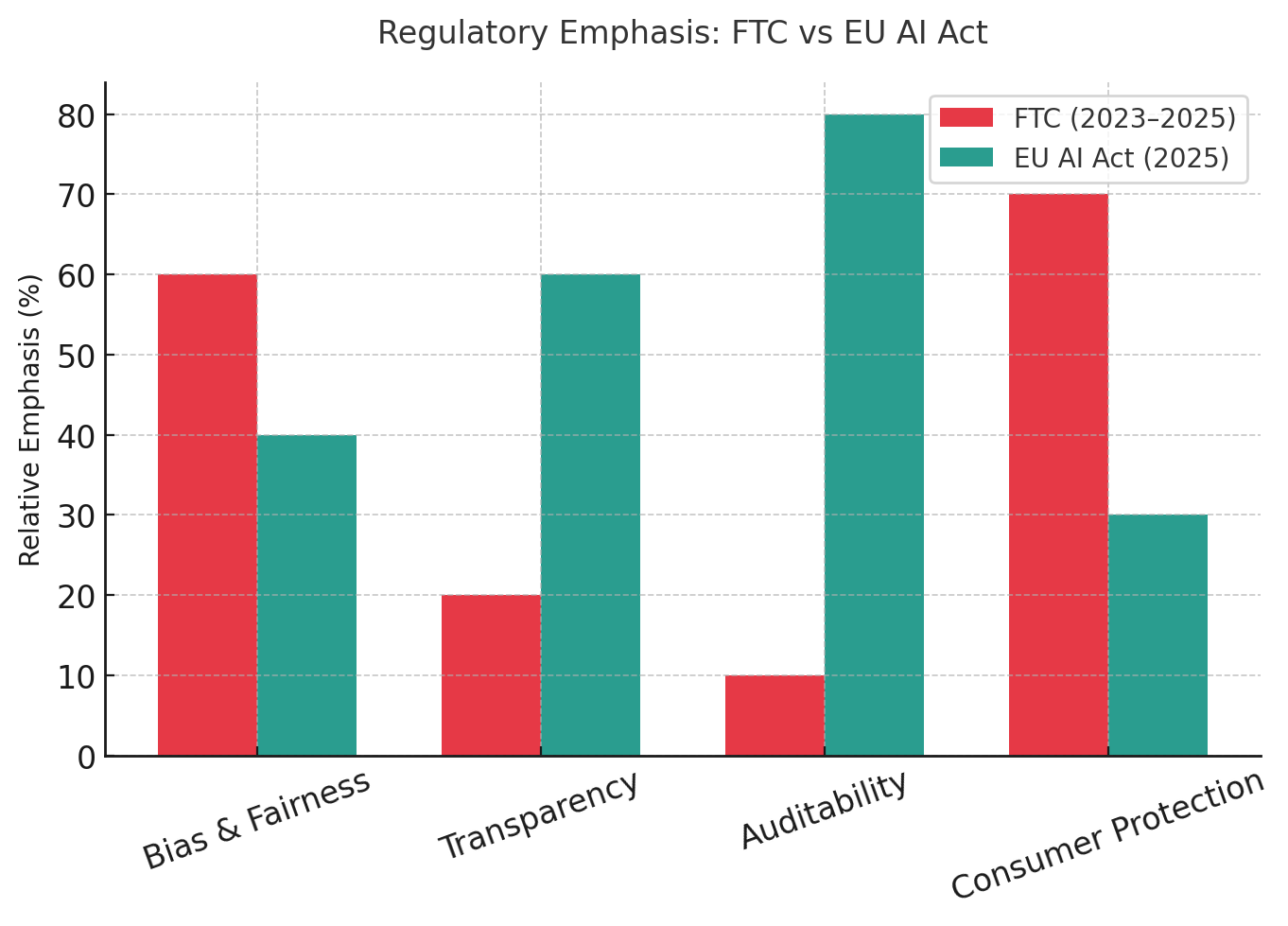

The next 12 to 24 months constitute a critical window. Regulatory scrutiny is increasing even as enforcement retreats in some jurisdictions. The EU AI Act emphasizes transparency and human oversight for high-risk AI, which translates to requirements for auditability of AI decisions and proper access controls. Financial regulators are likely to mandate that autonomous trading or credit decisions can be traced to specific human supervisors or documented policies. Privacy regulations (GDPR, HIPAA) increasingly demand logging of AI access to personal data and clear chains of authority for automated decisions.

The operational imperative starts with comprehensive inventory. One survey found that 91% of organizations use AI agents, yet only 10% have a strategy for managing these identities. Shadow AI deployments, where business units spin up agents outside IT visibility, create unmapped attack surface. The inventory must cover every AI agent, API, service account, and automated workflow. Each must have a unique identity rather than sharing human credentials or generic service accounts.

Enforcement means shifting to ephemeral, least-privilege credentials by default. Long-lived static secrets must be phased out in favor of short-lived tokens, certificates, or dynamically generated credentials. Research on AI-driven threat detection for OAuth and SAML systems shows this shift can reduce OAuth token misuse by 80% and improve SAML forgery detection by 90%.

Practical implementation involves integration with CI/CD pipelines so that when a developer deploys a service or triggers a workflow, the system automatically generates an identity, assigns appropriate scoped access, and begins logging activity. When the workflow completes or the service terminates, credentials are revoked immediately.
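The issue, scope, log, and revoke cycle described above maps naturally onto a context manager. This is a sketch under stated assumptions: `CredentialBroker` is an in-memory stand-in for a vault or token service, and the workload name and scope strings are invented for illustration.

```python
import secrets
from contextlib import contextmanager
from datetime import datetime, timedelta, timezone


class CredentialBroker:
    """In-memory stand-in for a secrets vault or token service (hypothetical)."""

    def __init__(self):
        self.active = {}
        self.audit_log = []

    def issue(self, workload, scopes, ttl):
        token = secrets.token_urlsafe(24)
        self.active[token] = {
            "workload": workload,
            "scopes": frozenset(scopes),
            "expires_at": datetime.now(timezone.utc) + ttl,
        }
        self.audit_log.append(("issued", workload))
        return token

    def is_valid(self, token, scope):
        entry = self.active.get(token)
        return (
            entry is not None
            and scope in entry["scopes"]
            and datetime.now(timezone.utc) < entry["expires_at"]
        )

    def revoke(self, token):
        entry = self.active.pop(token, None)
        if entry is not None:
            self.audit_log.append(("revoked", entry["workload"]))


@contextmanager
def ephemeral_identity(broker, workload, scopes, ttl=timedelta(minutes=10)):
    """Issue a scoped credential for one workflow run and revoke it when the run ends."""
    token = broker.issue(workload, scopes, ttl)
    try:
        yield token
    finally:
        broker.revoke(token)   # runs even if the workflow raises


broker = CredentialBroker()
with ephemeral_identity(broker, "build-7f3a", {"repo:read", "artifacts:write"}) as token:
    during = broker.is_valid(token, "repo:read")
    out_of_scope = broker.is_valid(token, "prod:deploy")
after = broker.is_valid(token, "repo:read")
print(during, out_of_scope, after, broker.audit_log)
```

The TTL acts as a backstop: if the pipeline runner dies before the `finally` block runs, the credential still expires on its own.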

Automation is the only viable path at scale. Manual identity lifecycle management fails at the volume and velocity of AI workflows. Secret vaults and key management systems must store and distribute all agent credentials, with hardcoded secrets treated as critical vulnerabilities.

Zero Trust principles must extend to every AI workload. Context-aware session monitoring should evaluate whether an identity is operating from the expected environment and whether behavior falls within normal bounds. Technologies like Identity Threat Detection and Response can spot anomalous identity usage patterns in real time.
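As a rough illustration of the two checks named above (expected environment, behavior within normal bounds), the sketch below compares an identity's request rate against its own baseline using a z-score. The three-standard-deviation threshold, the environment labels, and the sample baseline are assumptions; real ITDR products use far richer signals.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, expected_env, actual_env, threshold=3.0):
    """Flag a session that runs in the wrong environment or far outside its usual request rate."""
    if actual_env != expected_env:
        return True
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Requests per minute previously observed for one agent identity (illustrative numbers).
baseline_rpm = [40, 42, 38, 41, 39, 43, 40]

normal = is_anomalous(baseline_rpm, 41, "ci-runner", "ci-runner")
burst = is_anomalous(baseline_rpm, 400, "ci-runner", "ci-runner")
wrong_env = is_anomalous(baseline_rpm, 41, "ci-runner", "developer-laptop")
print(normal, burst, wrong_env)
```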

Monitoring and audit trails require comprehensive coverage. Every action by AI agents should be logged with sufficient detail to answer: which identity, what action, which data, when, and on whose behalf. These logs must feed into SIEM and XDR pipelines with detection rules updated to include machine identity patterns.
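The five questions above translate directly into a structured log record. Here is a minimal sketch that emits one JSON line per agent action; the field names and example values are assumptions rather than a SIEM vendor's schema.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AgentAuditEvent:
    identity: str       # which identity acted
    action: str         # what it did
    resource: str       # which data it touched
    timestamp: str      # when, as an ISO 8601 string
    on_behalf_of: str   # whose delegated authority it used


def to_log_line(event):
    """Serialize the event as a single JSON line suitable for log shipping."""
    return json.dumps(asdict(event), sort_keys=True)


event = AgentAuditEvent(
    identity="agent:report-builder-7f3a",
    action="SELECT",
    resource="finance.ledger",
    timestamp=datetime(2025, 3, 4, 10, 30, tzinfo=timezone.utc).isoformat(),
    on_behalf_of="user:alice",
)
line = to_log_line(event)
print(line)
```

Keeping `on_behalf_of` as a required field is the design choice that matters: it makes an agent action without a traceable delegating principal impossible to log silently.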

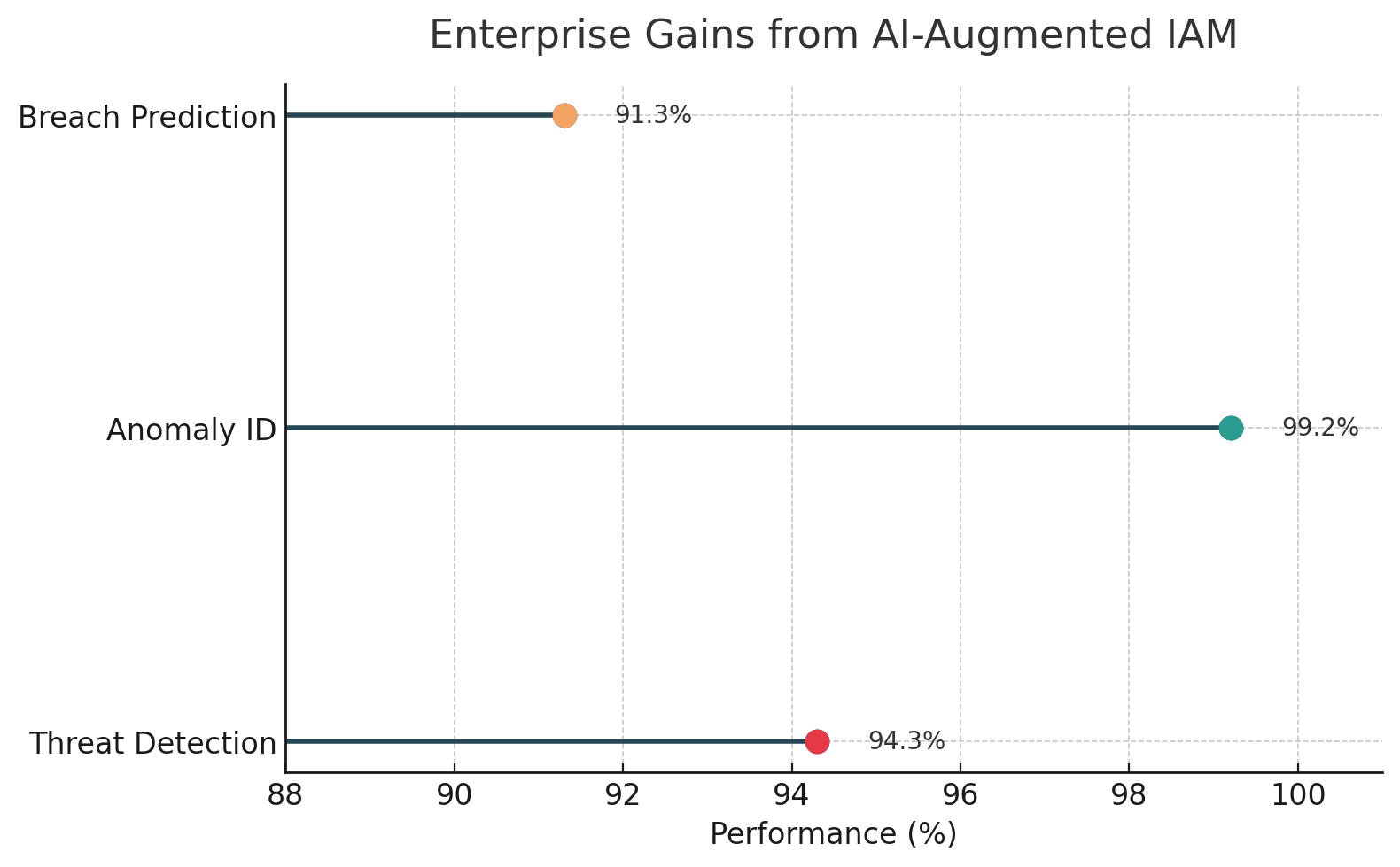

A systematic review of 25 empirical studies on session-level identity tracking technologies in AI-enabled business processes examined quantitative outcomes where organizations implemented advanced controls. The results varied significantly across implementations and technical stacks, and the review noted important limitations: lack of universal reporting on statistical significance, varying methodologies across studies, and challenges in cross-study comparability. However, directional findings were consistent.

One enterprise implementation of SAP Business Technology Platform with AI-augmented identity and access management reported threat detection accuracy of 94.3%, anomaly identification at 99.2%, and breach prediction at 91.3%. Security incidents declined 78.5%, policy inconsistencies fell 94.2%, mean time to detect dropped from 197 hours to 15 hours, and mean time to respond dropped from 4.2 hours to 18 minutes. These represent results from a specific enterprise deployment, not universal benchmarks.
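For comparison with the percentage figures in the same paragraph, the detection and response times can be converted to relative reductions. The calculation below is plain arithmetic on the numbers reported above; the derived percentages are not stated in the source.

```python
def percent_reduction(before, after):
    """Relative reduction from `before` to `after`, expressed as a percentage."""
    return (before - after) / before * 100


# Mean time to detect: 197 hours down to 15 hours.
mttd_reduction = percent_reduction(197, 15)

# Mean time to respond: 4.2 hours (252 minutes) down to 18 minutes.
mttr_reduction = percent_reduction(4.2 * 60, 18)

print(f"MTTD reduction: {mttd_reduction:.1f}%")
print(f"MTTR reduction: {mttr_reduction:.1f}%")
```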

A FinTech implementation using predictive threat analysis with adaptive learning and blockchain components reported fraud reduction up to 85%, false positives down 30%, risk analysis time reduced 60%, and authentication success rates at 95%. Account takeover incidents fell 70%. A different study examining hybrid deep learning for identity and access management anomaly detection achieved 85.44% accuracy, 87.95% precision, and 85.44% recall using convolutional neural network and long short-term memory approaches.

Machine learning implementations for access control in enterprise settings achieved 95% accuracy with 0.15 second response times. An AI-driven Zero Trust implementation for Kubernetes and multi-cloud deployments showed improved threat detection speed and incident reduction, though without specific percentages reported. AI-driven threat segmentation in Zero Trust architectures showed improved detection and categorization with enhanced detection of insider threats, again without specific quantitative measures.

The systematic review concluded that advanced session-level identity tracking technologies, particularly those leveraging AI and machine learning for user behavior analysis and real-time contextual monitoring, are associated with reductions in security vulnerabilities in AI-enabled business processes. Where quantitative improvements were reported, they were substantial, but the strength of this conclusion is limited by quality and consistency of reporting across studies.

The Vendor Pivot

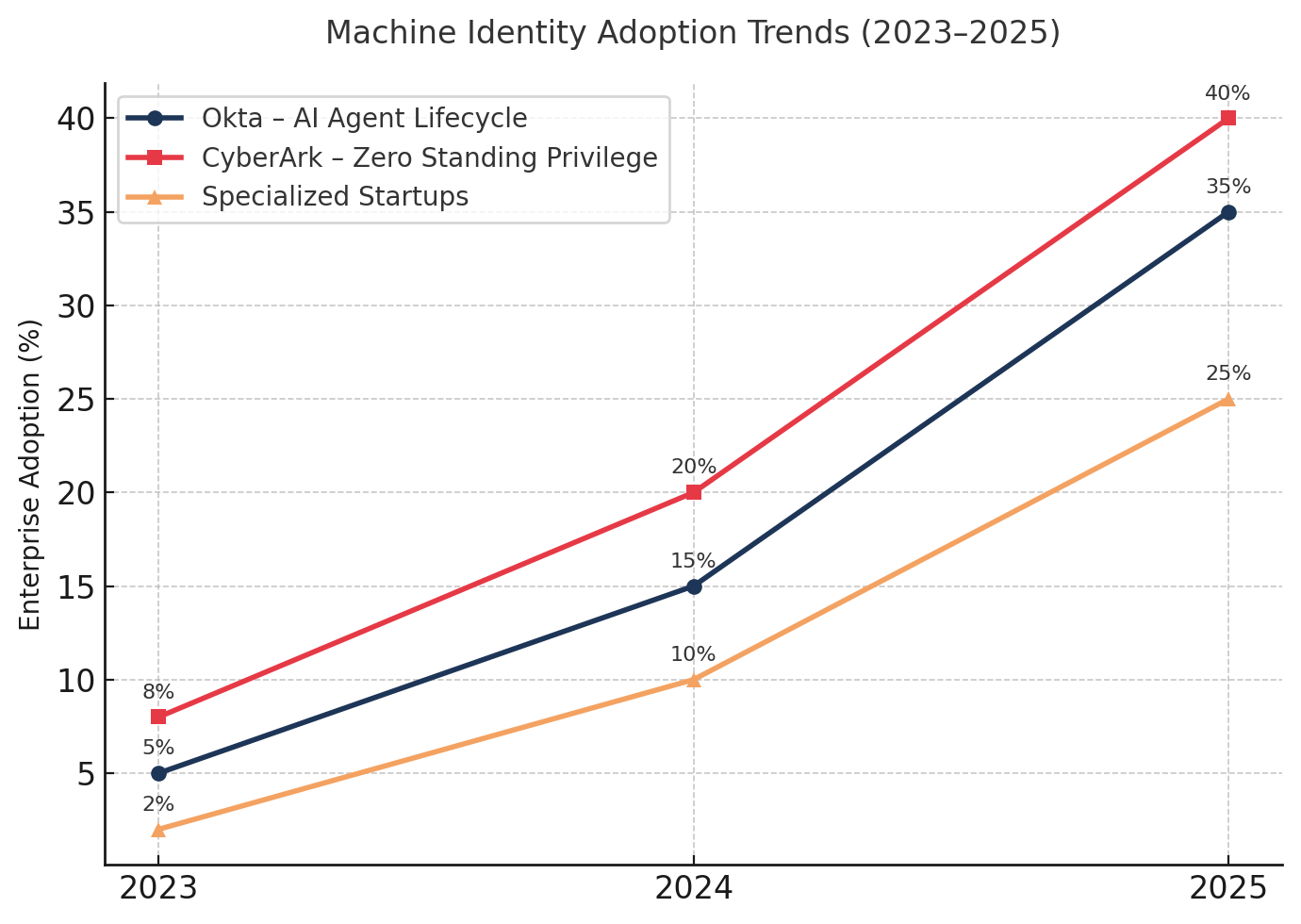

The identity security vendor landscape is undergoing rapid transformation. Traditional IAM providers are extending platforms to handle autonomous agents. Okta introduced "Okta for AI Agents" in September 2025, offering lifecycle management, policy enforcement, and discovery of rogue agents integrated into its identity fabric. CyberArk has emphasized "zero standing privilege" architectures that align with ephemeral credential issuance for both administrators and machines.

Startups have emerged focused specifically on machine identity: Aembit for workload IAM, Strata for agent identity orchestration, and others offering secretless authentication and policy engines for ephemeral access. A new protocol called Cross-App Access (XAA) has backing from multiple vendors to secure agent-to-app interactions with granular controls tailored for AI workflows, providing real-time visibility and policy-driven security.

But vendor claims require scrutiny. The market is crowded with "AI-powered" solutions. CISOs should evaluate vendors on three criteria: the problem (does the solution require AI to solve a genuine identity challenge like behavioral baselining and intelligent anomaly detection), the proof (can it manage and audit thousands of short-lived sessions daily with standards like SPIFFE/SVID), and the practicality (does it cover cloud, SaaS, on-premise, and CI/CD environments or just cloud infrastructure).

Cloud Infrastructure Entitlement Management (CIEM) tools, while necessary, are insufficient alone. CIEM is inherently limited in scope to cloud infrastructure and often fails to address security challenges posed by non-human identities in hybrid environments, SaaS applications, on-premise systems, and critical CI/CD pipelines. Organizations need terrain-agnostic Non-Human Identity Security that provides unified visibility across all environments.

The Regulatory Landscape

The Trump administration's AI Action Plan, released in 2025, signals a sharp shift away from the enforcement approach taken under the Biden FTC. The plan promises to review all FTC actions from the previous administration to ensure they do not "unduly burden AI innovation" and threatens to withhold federal AI funding from states with burdensome regulations.

This represents a regulatory retreat at a moment when technical risks are escalating. The FTC under Lina Khan pursued cases where AI companies either made false claims about their technology or deployed systems that caused measurable harm. The Evolv case involved AI security systems that failed to detect weapons used in actual stabbings. The IntelliVision case challenged unfounded claims about bias-free facial recognition. The Rite Aid case responded to documented harm against customers falsely accused based on AI errors.

These enforcement actions imposed modest financial penalties but established important precedent: organizations are liable for the accuracy and impact of their AI systems, not just their marketing claims. The Trump administration's plan to review and potentially repeal such actions removes a significant accountability mechanism.

Leah Frazier, who worked at the FTC for 17 years and served as Khan's advisor, distinguishes between two types of FTC AI cases: deception cases (where companies mislead consumers) and responsible use cases (where companies deploy AI in ways that harm people). The deception cases enjoyed bipartisan support. The responsible use cases, particularly those involving testing AI for bias, appear likely to disappear under the new administration.

The practical effect will be faster AI deployment with less federal oversight of accuracy, fairness, or consumer protection. For identity security, this increases the stakes for CISOs. Regulatory pressure that might have forced better controls on AI agent identity and accountability is diminishing at the federal level. The burden shifts to internal governance and state-level regulation.

At the same time, other regulatory frameworks continue evolving. Privacy regulations will increasingly demand clear auditability and explainability for identity and access decisions made by autonomous agents, especially those handling delegated human authority. The compliance challenge is acute: demonstrating that AI agent actions comply with data minimization, purpose limitation, and accountability principles when those agents operate autonomously at machine speed.

The Path Forward

Session-level identity in AI-driven workflows represents a structural crisis that most organizations are addressing inadequately. The evidence is quantitative: 91% of organizations experienced identity incidents in 2024, attackers captured 895,802 credentials for enterprise AI platforms, and machine identities outnumber human identities by up to 50 to 1 in modern enterprises.

The mismatch between AI operational requirements and identity infrastructure capabilities creates vulnerabilities that attackers are actively exploiting. Traditional perimeter defenses and user-centric IAM fail against machine-speed lateral movement using compromised or hijacked agent sessions.

The solution path is clear but demanding: comprehensive identity inventory including all non-human entities, automated lifecycle management with ephemeral credentials as default, just-in-time access with automatic revocation, context-aware policy enforcement, continuous behavioral monitoring, and unified visibility across hybrid environments.

The investment is substantial. It requires technology upgrades, process redesign, and cultural change across security, IT, and business units. But the alternative is continuing to operate AI workflows with identity controls designed for an obsolete threat model, accumulating security debt that compounds with every new agent deployment.

The window for proactive action is narrowing. Attackers have adapted their techniques to target session-level vulnerabilities. Regulatory expectations vary by jurisdiction but trend toward greater accountability requirements. The enterprises that move first to establish robust identity governance for AI agents will build competitive advantage through both security resilience and operational efficiency. Those that delay will find themselves responding to breaches, enforcement actions, and business disruption that could have been prevented.

The session has become the critical security boundary. Whether organizations secure it will determine which survive the next wave of identity-based attacks.

Stay safe, stay secure.

The CybersecurityHQ Team
