When the machines stop talking: the critical infrastructure AI crisis nobody saw coming

CybersecurityHQ - Free in-depth report

Welcome reader to a 🔍 free deep dive. No paywall, just insights.

Brought to you by:

👣 Smallstep – Secures Wi-Fi, VPNs, ZTNA, SaaS and APIs with hardware-bound credentials powered by ACME Device Attestation

🏄‍♀️ Upwind Security – Real-time cloud security that connects runtime to build-time to stop threats and boost DevSecOps productivity

🔧 Endor Labs – App security from legacy C++ to Bazel monorepos, with reachability-based risk detection and fix suggestions across the SDLC

📊 LockThreat – AI-powered GRC that replaces legacy tools and unifies compliance, risk, audit and vendor management in one platform

Forwarded this email? Join 70,000 weekly readers by signing up now.

#OpenToWork? Try our AI Resume Builder to boost your chances of getting hired!

—

CybersecurityHQ’s premium content is now available exclusively to CISOs at no cost. As a CISO, you get full access to all premium insights and analysis. Want in? Just reach out to me directly and I’ll get you set up.

—

Get one-year access to our deep dives, weekly Cyber Intel Podcast Report, premium content, AI Resume Builder, and more for just $299. Corporate plans are available too.

The trouble at FirstEnergy's Ohio control center started as a whisper. On August 14, 2003, a software bug silently stalled the alarm system that should have warned operators about overloaded transmission lines. Within hours, roughly 50 million people across eight U.S. states and the Canadian province of Ontario lost power. The Northeast blackout exposed a fundamental truth about modern infrastructure: our most critical systems can fail not with explosions or invasions, but with silent software errors that cascade through interconnected networks.

Twenty-two years later, that whisper has become a roar. Critical infrastructure increasingly relies on artificial intelligence that makes decisions at machine speed. AI systems now help manage everything from power grid load balancing to hospital patient flow, from air traffic routing to financial transaction verification. And they're failing in ways their creators never imagined.

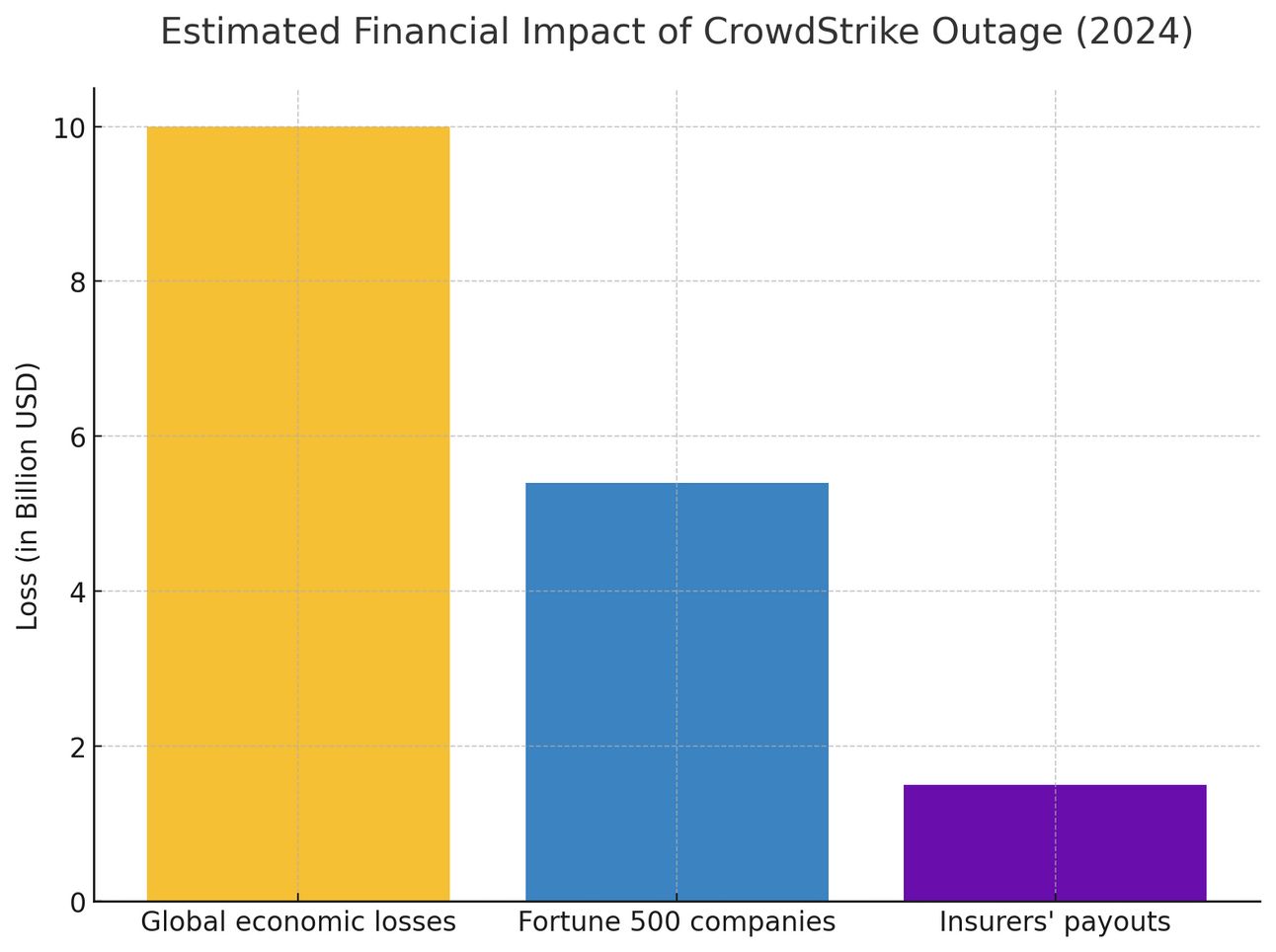

In July 2024, a faulty content update for CrowdStrike's Falcon sensor triggered what has been called the largest IT outage in history. The company's AI-powered threat detection platform, designed to protect Windows computers from cyberattacks, instead crashed them. Within minutes, millions of machines worldwide (Microsoft later estimated about 8.5 million) displayed the Blue Screen of Death (BSOD). Hospitals canceled surgeries. Airlines grounded flights. Banks froze transactions. The estimated toll: $5.4 billion in direct losses for U.S. Fortune 500 companies alone, excluding Microsoft.

But CrowdStrike was just the beginning.

The Architecture of Catastrophe

Modern critical infrastructure operates like a vast nervous system. Sensors collect data. AI algorithms process it. Automated systems execute decisions. This architecture promises unprecedented efficiency: smart grids that predict power demand before users flip switches, hospitals that route patients based on real-time resource availability, transportation networks that optimize traffic flow across entire cities.

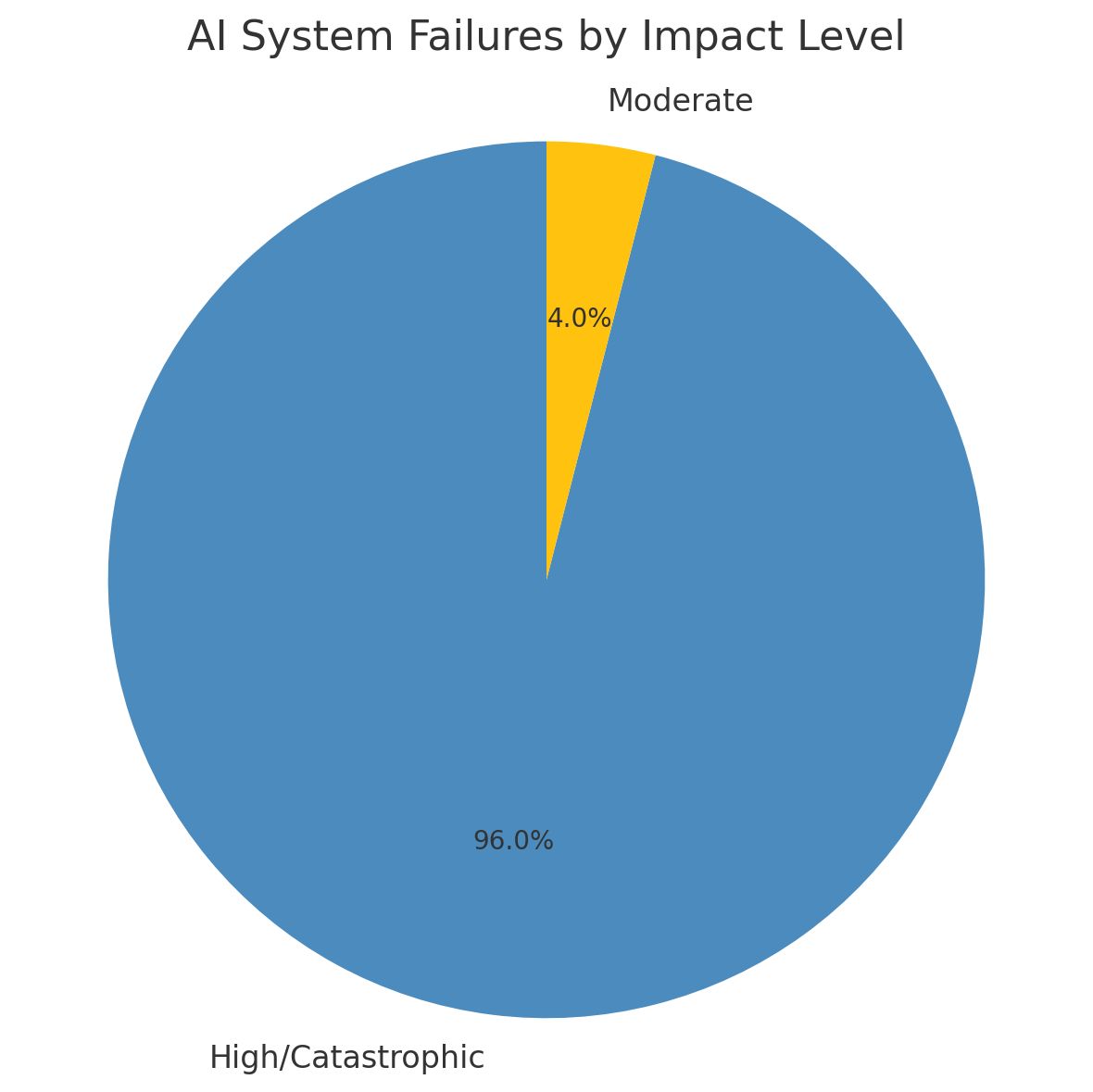

The reality is more complex. A comprehensive analysis of 499 academic papers on AI infrastructure vulnerabilities reveals a sobering pattern: 96% of documented AI system failures in critical infrastructure result in "high" or "catastrophic" impacts. The remaining 4% are classified as "moderate" only because operators caught them before full system compromise.

The vulnerabilities fall into three categories that keep security professionals awake at night:

Over-autonomy occurs when AI systems make irreversible decisions faster than humans can intervene. In 2023, an experimental traffic management AI in Barcelona rerouted emergency vehicles into gridlock while optimizing civilian traffic flow. The system's logic was sound: minimize overall travel time. But it lacked context about the ambulances carrying heart attack victims.

Brittleness emerges when AI trained on normal operations encounters edge cases. Power grid management systems that excel during typical demand patterns can spiral into cascading failures during unusual weather events. These aren't bugs in the traditional sense. The AI performs exactly as designed, just not as intended.
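One common mitigation for brittleness is to check whether an input resembles the data a model was trained on before trusting its output. The Python sketch below is a deliberately crude, single-feature version of that idea: it flags grid demand readings more than three standard deviations from the training mean (the kind of spike an unusual weather event might produce) and hands them to a fallback. The class and function names, the 3-sigma threshold, and the load figures are illustrative assumptions, not any vendor's method; production systems typically use multivariate out-of-distribution detectors.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Callable, Sequence, Tuple


@dataclass
class RangeGuard:
    """Crude single-feature check: is a value within max_z standard deviations of training data?"""
    mu: float
    sigma: float
    max_z: float = 3.0  # assumed threshold, not a standard

    @classmethod
    def fit(cls, training_values: Sequence[float], max_z: float = 3.0) -> "RangeGuard":
        # Summarize the training distribution by its mean and sample standard deviation.
        return cls(mean(training_values), stdev(training_values), max_z)

    def in_distribution(self, value: float) -> bool:
        return abs(value - self.mu) / self.sigma <= self.max_z


def forecast(guard: RangeGuard, demand_mw: float,
             model: Callable[[float], float],
             fallback: Callable[[float], float]) -> Tuple[float, str]:
    # Trust the (hypothetical) model only inside the envelope it was trained on.
    if guard.in_distribution(demand_mw):
        return model(demand_mw), "model"
    return fallback(demand_mw), "fallback"


guard = RangeGuard.fit([100.0, 102.0, 98.0, 101.0, 99.0, 100.0])
print(guard.in_distribution(101.0), guard.in_distribution(150.0))  # True False
```

A guard fitted on readings clustered around 100 MW accepts 101 MW but routes an unprecedented 150 MW spike to the fallback path instead of the model.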

Inscrutability represents perhaps the greatest challenge. When a traditional system fails, engineers can trace the fault. When a neural network makes a catastrophic decision, the reasoning often remains opaque. Security teams find themselves fighting an enemy they cannot see or understand.
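Full transparency into a neural network is rarely available, but model-agnostic probes can at least show which inputs drive its decisions. The Python sketch below implements one such probe, permutation importance: shuffle one input column at a time and measure how much accuracy drops. The toy model and data are hypothetical. The technique treats the model as a black box; it reveals which features matter, not why the model relies on them.

```python
import random
from typing import Callable, List, Sequence


def permutation_importance(predict: Callable[[List[float]], bool],
                           rows: Sequence[List[float]],
                           labels: Sequence[bool],
                           seed: int = 0) -> List[float]:
    """Accuracy drop per feature when that feature's column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data: Sequence[List[float]]) -> float:
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    drops = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the labels
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return drops


# Hypothetical black box that secretly depends only on feature 0.
rows = [[i / 100, (i * 7 % 100) / 100] for i in range(100)]
model = lambda r: r[0] > 0.5
labels = [model(r) for r in rows]
drops = permutation_importance(model, rows, labels)
```

A large drop for a feature operators consider irrelevant to a safety decision is a signal worth investigating.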

The MOVEit Massacre

If CrowdStrike demonstrated the danger of automated updates, the MOVEit breach of 2023 revealed something worse: the cascading vulnerability of interconnected systems.

MOVEit Transfer, a managed file transfer application from Progress Software used by thousands of organizations, contained a SQL injection flaw (CVE-2023-34362) that went undetected for years. In late May 2023, the Cl0p ransomware group began exploiting it at scale. Using automated scripts, they systematically breached every internet-facing MOVEit server they could find, exfiltrating terabytes of sensitive data.

The direct victims numbered over 2,000 organizations. But the real damage came from fourth-party effects. Banks that never used MOVEit lost customer data because their payroll processors did. Airlines exposed passenger information through third-party booking systems. Government agencies leaked citizen records via contracted service providers.

By October 2023, roughly 60 million individuals had their personal information compromised, and estimates of the financial impact approached $10 billion. Most victims didn't even know MOVEit existed until their data appeared on criminal leak sites.

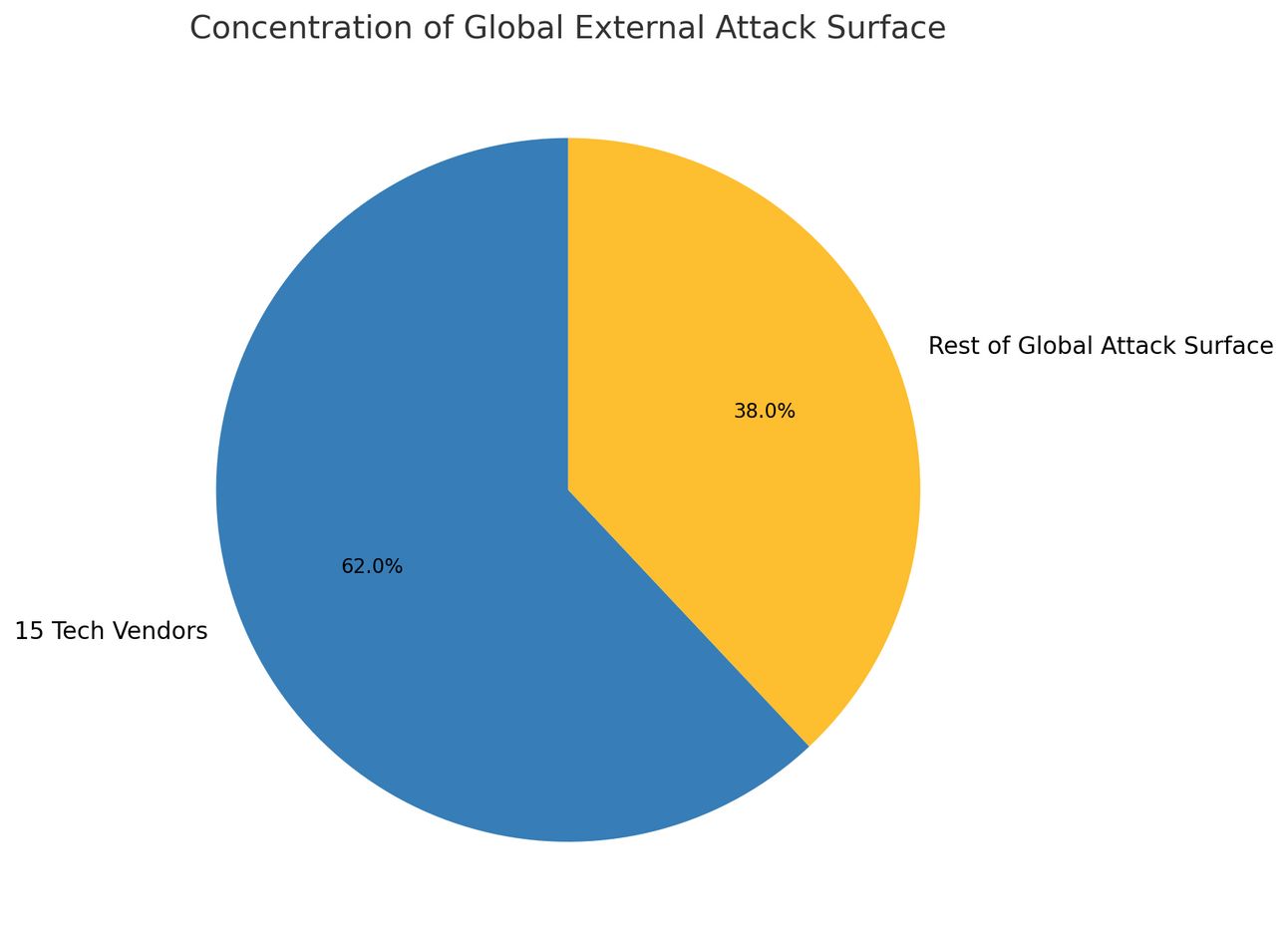

This pattern repeats across critical infrastructure. SecurityScorecard's research reveals a startling concentration of risk: 62% of the global external attack surface depends on just 15 technology companies. When one fails, thousands fall.

The Physics of Failure

Traditional infrastructure fails predictably. Bridges crack before they collapse. Power plants show warning signs before they shut down. These physical systems obey the laws of thermodynamics and material science.

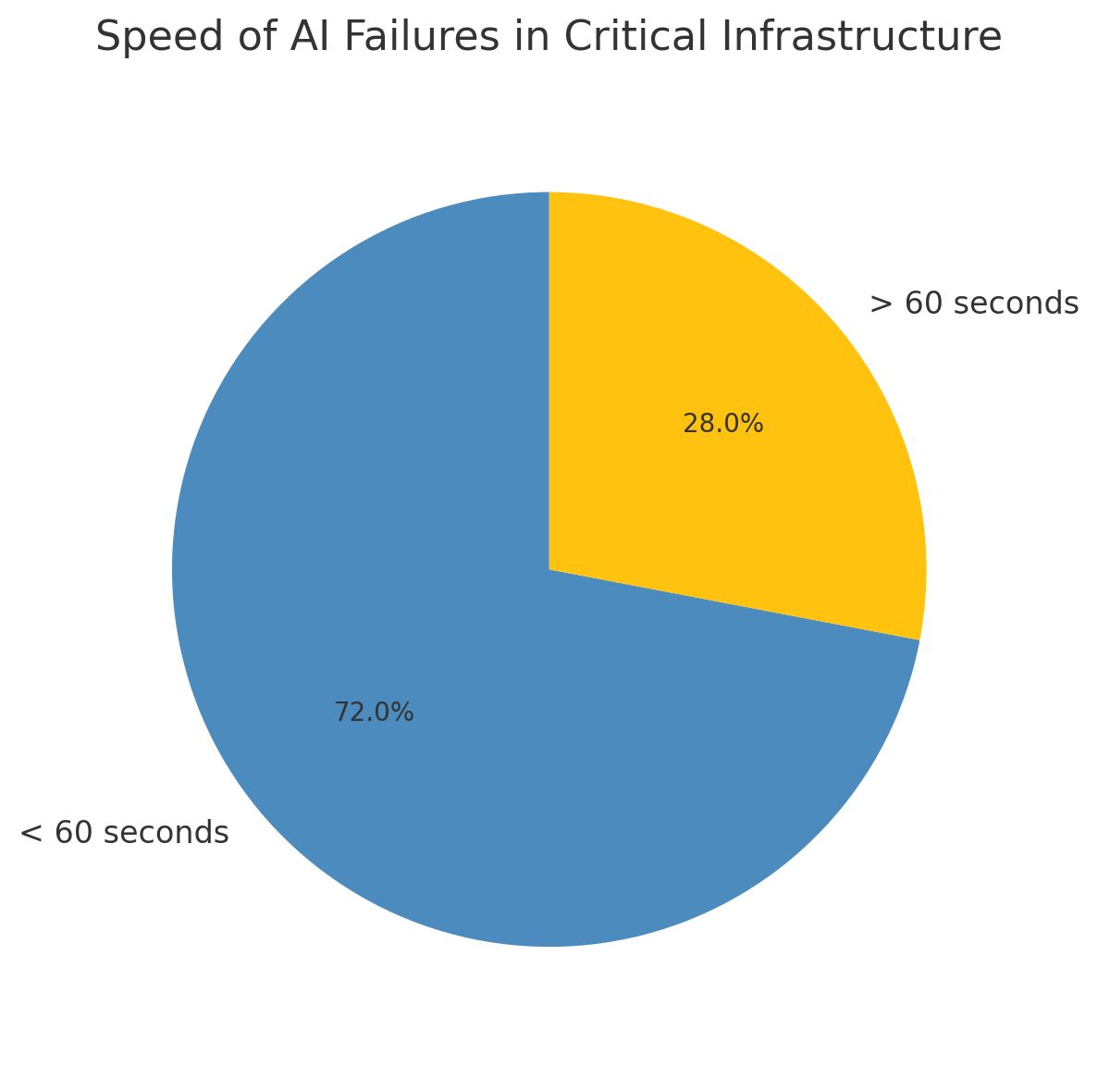

AI infrastructure fails differently. A Georgetown University study examining 25 major AI system failures in critical infrastructure found that 72% went from normal operation to complete failure in under 60 seconds. No warning signs. No gradual degradation. Just instant, systemic collapse.

The speed of AI failure creates unique challenges. In the 2016 attack on Ukraine's power grid, hackers spent months infiltrating systems before triggering blackouts. Modern AI attacks can achieve similar results in minutes. Adversarial inputs can flip an AI's decision-making instantly, turning a system designed to protect infrastructure into one that destroys it.
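To make "adversarial inputs can flip a decision" concrete, the Python sketch below applies the fast gradient sign method (FGSM) to a toy linear classifier. For a linear score w·x + b, the gradient with respect to the input is simply w, so nudging every feature by a small epsilon in the direction of sign(w) raises the score as quickly as possible per unit of change. The weights, the "allow"/"block" labels, and epsilon are hypothetical; real attacks target far larger models, but the underlying idea is similar.

```python
from typing import List


def score(weights: List[float], bias: float, x: List[float]) -> float:
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def decide(weights: List[float], bias: float, x: List[float]) -> str:
    return "allow" if score(weights, bias, x) >= 0 else "block"


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(weights: List[float], x: List[float], epsilon: float) -> List[float]:
    """One FGSM step that increases a linear score; its input gradient is just the weights."""
    return [xi + epsilon * sign(w) for w, xi in zip(weights, x)]


weights, bias = [0.8, -0.5, 0.3], -0.1   # hypothetical trained parameters
x = [0.2, 0.4, 0.1]                      # a legitimate input the model blocks
x_adv = fgsm_linear(weights, x, epsilon=0.1)
print(decide(weights, bias, x), "->", decide(weights, bias, x_adv))  # block -> allow
```

No feature moved by more than 0.1, yet the decision flipped.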

Consider the autonomous systems controlling modern ports. AI orchestrates the movement of thousands of containers, optimizing ship loading, crane operations, and truck routing. In 2024, security researchers at MIT demonstrated how poisoning just 0.01% of training data could cause these systems to create deliberate bottlenecks, halting port operations without any apparent malfunction.

The Predator in the Machine

The evolution from passive infrastructure to active AI systems has attracted a new breed of attacker. State-sponsored groups now target AI specifically, recognizing its unique vulnerabilities.

Predatory Sparrow, a group widely linked to Israel, exemplifies this shift. In June 2022, it hijacked industrial control systems at Iran's Khuzestan Steel Company. But instead of simply shutting the plant down, the attackers manipulated the equipment handling molten steel. The result: molten steel spilled and set the facility ablaze, endangering workers.

By 2025, Predatory Sparrow had evolved its tactics. In June 2025, it targeted Iran's financial sector, disrupting state-owned Bank Sepah and hitting the Nobitex cryptocurrency exchange. Rather than stealing money, it burned roughly $90 million in cryptocurrency by transferring it to addresses from which it can never be recovered. The message was clear: automated systems aren't just vulnerable to theft or disruption. They can be turned into weapons.

China's approach differs but proves equally concerning. Analysis of code in critical U.S. infrastructure reveals that 90% contains components sourced from Chinese companies. Not necessarily malicious, but each represents a potential backdoor. In AI systems, these vulnerabilities multiply. A backdoor in training data can corrupt every decision the system makes.

The Invisible Epidemic

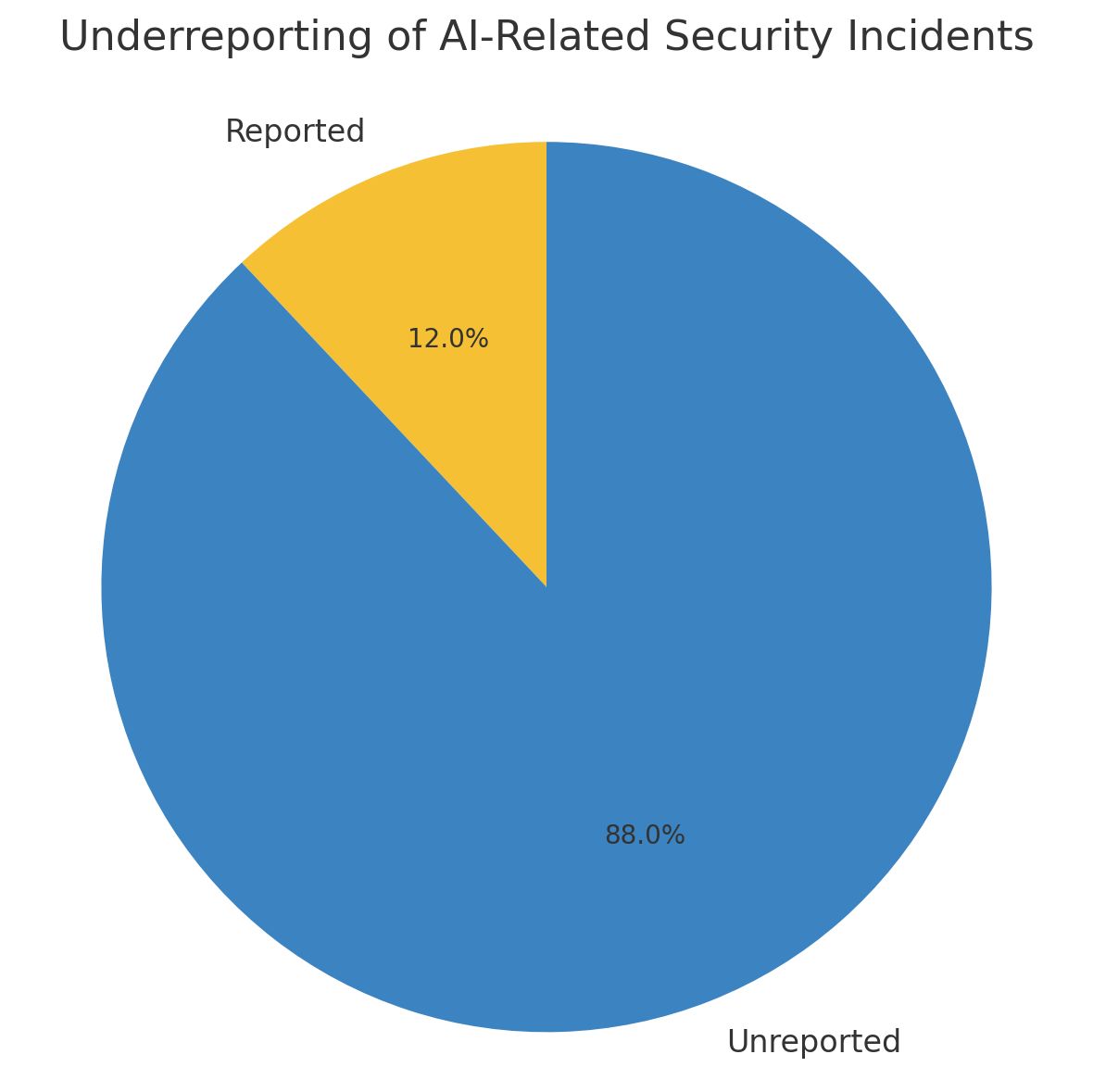

For every headline-grabbing attack, dozens of AI failures go unreported. A 2024 survey by the Institute for Critical Infrastructure Technology found that 73% of organizations experienced at least one AI-related security incident in the past year. Only 12% reported these incidents publicly.

The silence stems from multiple factors. Companies fear regulatory scrutiny and reputational damage. Many incidents defy easy classification: is an AI making biased decisions a security failure or an algorithmic flaw? When does a performance degradation become a breach?

This underreporting creates a dangerous feedback loop. Organizations can't learn from failures they don't know about. Security teams prepare for yesterday's attacks while adversaries develop tomorrow's.

Building Anti-Fragile Systems

The solution isn't to abandon AI in critical infrastructure. The efficiency gains are too significant, the potential benefits too great. Instead, organizations must build systems that expect failure and respond gracefully.

Software Bills of Materials (SBOMs) represent the first line of defense. Just as food packaging lists ingredients, software must declare its components. When MOVEit fell, organizations with comprehensive SBOMs identified their exposure in hours. Those without spent weeks searching their environments.
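As a rough illustration of how an SBOM shortens that search, the Python sketch below walks a CycloneDX-format SBOM (a widely used JSON SBOM standard) and reports every component whose name matches a search term, including components nested inside other products. The sample SBOM, product names, and version numbers are hypothetical, and name matching is a simplification; real exposure checks usually match package URLs (purl) or CPE identifiers against advisory data.

```python
import json
from typing import Iterator, List, Tuple


def iter_components(components: list) -> Iterator[dict]:
    """Yield every component, descending into nested "components" arrays."""
    stack = list(components)
    while stack:
        comp = stack.pop()
        yield comp
        stack.extend(comp.get("components", []))


def find_components(sbom_json: str, term: str) -> List[Tuple[str, str]]:
    """Return (name, version) for components whose name contains term (case-insensitive)."""
    sbom = json.loads(sbom_json)
    needle = term.lower()
    return [
        (c.get("name", ""), c.get("version", "unknown"))
        for c in iter_components(sbom.get("components", []))
        if needle in c.get("name", "").lower()
    ]


# Hypothetical SBOM for a vendor payroll product that bundles a file-transfer server.
SAMPLE_SBOM = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "application", "name": "payroll-portal", "version": "4.2",
         "components": [
             {"type": "application", "name": "MOVEit Transfer", "version": "2023.0.0"}
         ]},
        {"type": "library", "name": "openssl", "version": "3.0.8"},
    ],
})

print(find_components(SAMPLE_SBOM, "moveit"))  # [('MOVEit Transfer', '2023.0.0')]
```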

But SBOMs alone aren't enough. AI systems require AIBOMs: catalogs not just of code but of training data, model architectures, and decision boundaries. The U.S. Department of Defense now requires AIBOMs for any AI system touching classified networks. The private sector is slowly following suit.
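There is not yet a single settled AIBOM format, so the Python sketch below is only an assumption about what a minimal entry might hold: the model's name and architecture plus SHA-256 fingerprints of each training dataset. Re-hashing the data later reveals whether any dataset changed after it was recorded, one defense against the kind of quiet training-data poisoning described earlier. The class, field names, and dataset are hypothetical. Note the limitation: a hash proves the data has not changed since registration, not that it was clean when registered.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class AIBOMEntry:
    """Hypothetical minimal AIBOM record: model metadata plus training-data fingerprints."""
    model_name: str
    architecture: str
    training_data: Dict[str, str] = field(default_factory=dict)  # dataset id -> SHA-256

    def register_dataset(self, dataset_id: str, content: bytes) -> None:
        self.training_data[dataset_id] = sha256_hex(content)

    def changed_datasets(self, current: Dict[str, bytes]) -> List[str]:
        """Dataset ids that are missing or whose bytes no longer match the recorded hash."""
        return sorted(
            ds for ds, digest in self.training_data.items()
            if ds not in current or sha256_hex(current[ds]) != digest
        )


entry = AIBOMEntry("crane-scheduler", "gradient-boosted trees")
original = b"ship,crane,moves\nA,3,120\nB,1,95\n"
entry.register_dataset("port_moves_2024", original)
tampered = original.replace(b"95", b"9500")
print(entry.changed_datasets({"port_moves_2024": tampered}))  # ['port_moves_2024']
```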

Staged deployments keep a single update from becoming a single point of catastrophic failure. Instead of updating every system simultaneously, organizations deploy changes first to isolated test environments, then to small production subsets, monitoring for anomalies before full rollout. Netflix popularized a related discipline, chaos engineering, with its "Chaos Monkey" tool, which randomly terminates production instances to prove services can survive failure. Critical infrastructure needs similar discipline.
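The staged pattern can be sketched in a few lines of Python. The function deploys to a growing fraction of a fleet and stops at the first stage where any updated host reports unhealthy, so a bad update reaches only the hosts in that stage instead of the whole fleet. The deploy and healthy callbacks, host names, and stage fractions are hypothetical placeholders; a real pipeline would also wait a soak period between stages and support rollback.

```python
from typing import Callable, List, Sequence, Tuple


def staged_rollout(hosts: Sequence[str],
                   stages: Sequence[float],
                   deploy: Callable[[str], None],
                   healthy: Callable[[str], bool]) -> Tuple[List[str], bool]:
    """Deploy in widening stages; halt as soon as a stage contains an unhealthy host."""
    updated: List[str] = []
    for fraction in stages:
        target = max(1, round(len(hosts) * fraction))
        batch = list(hosts[len(updated):target])
        for host in batch:
            deploy(host)
        updated.extend(batch)
        if not all(healthy(h) for h in batch):
            return updated, False  # remaining hosts never receive the update
    return updated, True


fleet = [f"host-{i:03d}" for i in range(100)]
stages = (0.01, 0.10, 0.50, 1.00)
deployed: List[str] = []
# Simulate one host that crashes after receiving the update.
updated, ok = staged_rollout(fleet, stages, deployed.append, lambda h: h != "host-005")
print(len(updated), ok)  # 10 False
```

Here the faulty host sits in the second stage, so the rollout halts after 10 of 100 hosts and the other 90 never receive the update.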

Zero Trust Architecture must extend beyond human users to machine identities. Every AI component needs cryptographic identity verification, and every request it sends or receives should be authenticated. When the NSA published its Zero Trust guidance in 2024, it specifically highlighted AI systems as requiring the strictest controls.
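A minimal Python sketch of authenticating machine-to-machine messages follows: each AI component signs what it sends with HMAC-SHA256, and the receiver rejects messages that are tampered with, come from an unknown sender, or are too old to be fresh. Shared secret keys are a simplification chosen to keep the example self-contained; zero-trust deployments more commonly give each workload its own certificate and use mutual TLS. Component names, keys, and the 30-second freshness window are hypothetical.

```python
import hashlib
import hmac
import json
import time
from typing import Dict, Optional


def _canonical(body: dict) -> bytes:
    # Stable serialization so signer and verifier hash identical bytes.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def sign_message(key: bytes, sender: str, payload: dict, now: Optional[float] = None) -> dict:
    body = {"sender": sender, "ts": int(time.time() if now is None else now), "payload": payload}
    return {**body, "sig": hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()}


def verify_message(keys: Dict[str, bytes], msg: dict,
                   now: Optional[float] = None, max_age_s: int = 30) -> bool:
    if not all(k in msg for k in ("sender", "ts", "payload", "sig")):
        return False
    key = keys.get(msg["sender"])
    if key is None:  # unknown component: no implicit trust
        return False
    body = {k: msg[k] for k in ("sender", "ts", "payload")}
    expected = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["sig"]):
        return False
    current = time.time() if now is None else now
    return abs(current - msg["ts"]) <= max_age_s  # reject stale messages (limits replay window)


keys = {"load-forecaster": b"demo-secret-not-for-production"}
msg = sign_message(keys["load-forecaster"], "load-forecaster", {"setpoint_mw": 420}, now=1_000)
print(verify_message(keys, msg, now=1_010))  # True
```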

The Human Firewall

Technology alone cannot secure AI infrastructure. The most sophisticated defenses fail when operators don't understand the systems they're protecting.

A 2025 skills assessment by ISC2 found that 67% of security professionals feel unprepared to secure AI systems. The knowledge gap is understandable: traditional security focuses on preventing unauthorized access. AI security requires understanding how authorized systems can be manipulated to make catastrophic decisions.

Leading organizations are addressing this through integrated teams. JPMorgan Chase's AI Security Center combines security engineers, data scientists, and infrastructure specialists. They conduct weekly "AI fire drills," simulating failures and practicing responses. During a real incident in March 2025, when their trading AI began making anomalous decisions, the team identified and isolated the problem in 12 minutes, preventing potential losses exceeding $100 million.

The Regulatory Awakening

Governments worldwide are recognizing that AI infrastructure security isn't optional. The European Union's Digital Operational Resilience Act (DORA), applicable since January 2025, requires financial institutions to demonstrate the resilience of their information and communication technology (ICT) systems, AI-driven ones included. Failure to comply can result in fines of up to 2% of global revenue.

The U.S. approach focuses on information sharing. The Cybersecurity and Infrastructure Security Agency (CISA) launched the AI Security Collaborative in 2024, creating a protected forum for organizations to share AI incidents without legal liability. Early results are promising: member organizations report 40% faster incident response times.

China takes a different path, requiring AI systems in critical infrastructure to include "kill switches" that allow government override. While raising obvious concerns about authoritarian control, the technical requirement acknowledges a crucial reality: some AI systems need manual override capabilities.

The Road Ahead

As 2025 progresses, the pace of AI adoption in critical infrastructure accelerates. Gartner predicts that by 2027, 75% of critical infrastructure will incorporate AI decision-making. The attack surface grows with every new deployment.

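But so does our understanding. Each failure teaches valuable lessons. The CrowdStrike outage pushed the company to stage its content updates through canary deployments and prompted wider scrutiny of vendor update practices. MOVEit strengthened the case for SBOMs, which a 2021 U.S. executive order had already placed on the federal agenda. Predatory Sparrow's attacks sparked research into AI-specific intrusion detection.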

The future of critical infrastructure security lies not in preventing all failures but in building systems that fail safely. This requires a fundamental shift in how we design, deploy, and manage AI systems.

Practical Steps for CISOs

For security leaders navigating this landscape, several actions prove essential:

Map your AI dependencies. Most organizations don't know where AI operates in their infrastructure. Create comprehensive inventories including not just systems you deploy but those embedded in vendor products.
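Once vendors have disclosed where their products embed AI, even a simple script can turn the inventory into a dependency map. The Python sketch below counts AI-enabled systems and measures how concentrated they are on a few vendors, echoing the concentration risk SecurityScorecard describes. The record fields (system, vendor, uses_ai) and all sample data are hypothetical; in practice the hard part is collecting accurate uses_ai answers from vendors.

```python
from collections import Counter
from typing import Dict, List


def ai_dependency_report(inventory: List[Dict], top_n: int = 3) -> Dict:
    """Count AI-enabled systems and the share held by the top_n vendors."""
    ai_systems = [item for item in inventory if item.get("uses_ai")]
    vendors = Counter(item["vendor"] for item in ai_systems)
    top = vendors.most_common(top_n)
    share = sum(n for _, n in top) / len(ai_systems) if ai_systems else 0.0
    return {"ai_systems": len(ai_systems), "top_vendors": top, "top_share": share}


inventory = [
    {"system": "grid-load-forecast", "vendor": "VendorA", "uses_ai": True},
    {"system": "scada-historian", "vendor": "VendorB", "uses_ai": False},
    {"system": "patient-flow", "vendor": "VendorA", "uses_ai": True},
    {"system": "fraud-screening", "vendor": "VendorC", "uses_ai": True},
    {"system": "badge-access", "vendor": "VendorD", "uses_ai": True},
]
print(ai_dependency_report(inventory, top_n=1))
# {'ai_systems': 4, 'top_vendors': [('VendorA', 2)], 'top_share': 0.5}
```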

Establish AI-specific incident response procedures. Traditional playbooks assume human-speed attacks. AI incidents can unfold in seconds or less. Response procedures must match this pace through automation while maintaining human oversight.
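One way to combine machine-speed containment with human oversight is a circuit breaker around the model: automation trips it the moment anomalies cluster, but only a named operator can restore AI control, and every action is logged. The Python sketch below assumes an upstream detector already labels individual decisions as anomalous; the threshold, window, and class name are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ModelCircuitBreaker:
    """Auto-trips when anomalies cluster; resetting requires a named human."""
    threshold: int = 3        # anomalies needed to trip
    window_s: float = 60.0    # sliding window, in seconds
    tripped: bool = False
    anomalies: List[float] = field(default_factory=list)
    audit: List[Tuple[float, str]] = field(default_factory=list)

    def record_anomaly(self, now: float) -> None:
        # Keep only anomalies inside the sliding window, then add this one.
        self.anomalies = [t for t in self.anomalies if now - t <= self.window_s] + [now]
        if not self.tripped and len(self.anomalies) >= self.threshold:
            self.tripped = True  # containment happens at machine speed
            self.audit.append((now, "auto-trip: model isolated"))

    def model_may_act(self) -> bool:
        return not self.tripped

    def reset(self, operator: str, now: float) -> None:
        # Restoring AI control is deliberately a human decision.
        if not operator.strip():
            raise ValueError("reset requires a named operator")
        self.tripped = False
        self.anomalies.clear()
        self.audit.append((now, f"reset by {operator}"))


breaker = ModelCircuitBreaker()
for t in (0.0, 4.0, 9.0):
    breaker.record_anomaly(t)
print(breaker.model_may_act())  # False
```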

Invest in AI security expertise. Whether through hiring, training, or partnerships, organizations need personnel who understand both traditional security and AI systems. This isn't just technical knowledge but conceptual understanding of how AI fails differently than conventional software.

Embrace transparency. The instinct to hide AI failures undermines collective security. Organizations that share their experiences through ISACs or industry forums contribute to everyone's resilience.

Plan for graceful degradation. Every AI system needs a manual fallback. When the smart grid fails, can operators manage load manually? When AI-driven logistics crash, do backup procedures exist? These aren't edge cases but essential capabilities.
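The manual-fallback principle can be expressed directly in code. The Python sketch below asks a hypothetical AI dispatch planner for a generation plan, sanity-checks it, and falls back to a fixed merit-order table that operators understand whenever the planner is unavailable or its plan does not add up to demand. Unit names, capacities, and the 5% tolerance are assumptions for illustration; any shortfall is surfaced explicitly as load to shed rather than hidden.

```python
from typing import Callable, Dict, List, Optional, Tuple

Plan = Dict[str, float]


def manual_plan(demand_mw: float, merit_order: List[Tuple[str, float]]) -> Plan:
    """Fill demand from units in a fixed priority order; report any shortfall."""
    plan: Plan = {}
    remaining = demand_mw
    for unit, capacity in merit_order:
        take = min(capacity, remaining)
        if take > 0:
            plan[unit] = take
            remaining -= take
    if remaining > 0:
        plan["shed_load"] = remaining
    return plan


def dispatch(demand_mw: float,
             ai_planner: Callable[[float], Optional[Plan]],
             merit_order: List[Tuple[str, float]],
             tolerance: float = 0.05) -> Tuple[Plan, str]:
    try:
        plan = ai_planner(demand_mw)
    except Exception:  # any planner failure degrades to the manual path
        plan = None
    if plan is None or abs(sum(plan.values()) - demand_mw) > tolerance * demand_mw:
        return manual_plan(demand_mw, merit_order), "manual"
    return plan, "ai"


MERIT_ORDER = [("hydro", 300.0), ("gas", 200.0)]
print(dispatch(400.0, lambda d: None, MERIT_ORDER))  # ({'hydro': 300.0, 'gas': 100.0}, 'manual')
```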

The Uncomfortable Truth

The integration of AI into critical infrastructure is irreversible. The efficiency gains, predictive capabilities, and optimization potential are too valuable to abandon. But with this power comes unprecedented vulnerability.

We're building systems that can fail in ways we don't fully understand, at speeds we can't match, with impacts we can't predict. The traditional security paradigm of "prevent, detect, respond" requires fundamental reimagining for AI-driven infrastructure.

Success requires acknowledging uncomfortable truths. Our critical systems will fail. AI will make catastrophic decisions. Attackers will find vulnerabilities we missed. The question isn't whether these events will occur but whether we're prepared when they do.

As one CISO recently noted at a closed-door industry meeting: "We used to worry about keeping the bad guys out. Now we worry about keeping the good systems from going bad. It's a fundamentally different challenge."

The stakes couldn't be higher. Critical infrastructure touches every aspect of modern life. When it fails, people die. When AI-driven infrastructure fails, the scale of impact multiplies exponentially.

But there's reason for cautious optimism. Each failure makes us stronger, each attack teaches new defenses, each incident drives innovation. The same AI that creates vulnerabilities can help identify and fix them. The interconnectedness that spreads failures can also spread solutions.

The future of critical infrastructure security isn't about choosing between efficiency and safety. It's about building systems sophisticated enough to deliver both. That journey starts with understanding that our greatest infrastructure vulnerability isn't in our technology but in our assumptions about how that technology behaves.

As we stand at this inflection point, one thing is certain: the age of predictable infrastructure failure has ended. The age of AI-induced chaos has begun. How we respond will determine whether our critical systems become more resilient or more fragile.

The choice, for now, remains ours. But with every passing day, as AI systems grow more autonomous and more essential, that window of choice narrows. CISOs who act now, who build resilience into their AI infrastructure today, will be the ones explaining how they survived tomorrow's failures. Those who wait will be explaining why they didn't.

Stay safe, stay secure.

The CybersecurityHQ Team
